NLP

Natural Language Processing

Classic style

What we saw last time

- APIs

- The web as a gigantic API

- the REST protocol

- inspecting a website (hack 101)

- building datasets from APIs

- Guided practice on the wikipedia API

Any questions?

Today

- classic NLP

- tokens

- NER: Named Entity Recognition

- POS: Part of Speech

- topic modeling

- text classification: sentiment, hate speech, categorisation, spam, etc.

- some libraries: Spacy.io, NLTK

- Practice on the NYT API to build a dataset and apply classic NLP techniques

At the end of this class

You

- understand what NLP is

- can query the NYT API

- can apply classic NLP techniques to a dataset using Spacy.io

In the News

What caught your attention this week?

YouTube just dropped over 30 new creator tools at its Made on YouTube event, including AI-powered editing and clipping features, the addition of Veo 3 Fast in Shorts, auto-dubbing, and more.

https://www.heygen.com/

The Rundown: Researchers at Stanford and the Arc Institute just created the first AI-generated, entirely new viruses from scratch that successfully infect and kill bacteria, marking a breakthrough in computational biology.

The details:

Scientists trained an AI model called Evo on 2M viruses, then asked it to design brand new ones — with 16 of 302 attempts proving functional in lab tests.

The AI viruses contained 392 mutations never seen in nature, including successful combos that scientists had previously tried and failed to engineer.

When bacteria developed resistance to natural viruses, AI-designed versions broke through defenses in days where the traditional viruses failed.

One synthetic version incorporated a component from a distantly related virus, something researchers had attempted unsuccessfully to design for years.

AI’s huge competitive coding win…

(source: The Neuron Daily)

OpenAI’s AI achieved a perfect score at the world’s top programming competition, beating all human teams.

OpenAI’s reasoning models achieved a perfect 12/12 score at the ICPC World Finals, the most prestigious programming competition in the world, outperforming every human team.

To put this in perspective, the best human team solved 11 out of 12 problems. And OpenAI competed under the same 5-hour time limit as human teams. They used an ensemble of general-purpose models, including GPT-5, with no special training for competitive programming. In fact, 11 out of 12 problems were solved on the first try.

Google’s Gemini 2.5 Deep Think also won gold, solving 10 out of 12 problems. This means two different AI systems both outperformed every human team on the planet. And Google’s performance was equally jaw-dropping:

Gemini solved 8 problems in just 45 minutes and cracked one problem that stumped every single human team.

NLP

From linguistics to NLP

Ferdinand de Saussure 1916

Linguistics is the scientific study of human language structure and theory

NLP (Natural Language Processing) is the computational field focused on building systems that can understand and generate human language.

Other classic refs

1966 Benveniste: Problèmes de linguistique générale

1957 Chomsky: Syntactic Structures

The basis of Chomsky’s linguistic theory lies in biolinguistics, the linguistic school that holds that the principles underpinning the structure of language are biologically preset in the human mind and hence genetically inherited. He argues that all humans share the same underlying linguistic structure, irrespective of sociocultural differences.

Speech and Language Processing

Speech and Language Processing (3rd ed. draft) Dan Jurafsky and James H. Martin

Latest release: August 24 2025!!

NLP timeline : the early days

| Era / Year | Key NLP Developments | Performance on Tasks |
|---|---|---|
| 1950s–1960s | First MT experiments, e.g., Georgetown-IBM (1954); generative grammar theories (Chomsky, 1957); ELIZA (1964) | Machine translation primitive; NER not yet formalized; dialogue systems purely rule-based |
| 1970s–1980s | SHRDLU (1970); PARRY (1972); rise of expert systems and handcrafted rules | Language understanding limited to constrained domains |
| Late 1980s–1990s | Adoption of statistical models; NER from news (MUC-7 ~1998); statistical MT replaces rule-based | NER F1 ~93%, nearing human (~97%); statistical MT limited in scope and quality |
| 2000s | Widespread statistical MT (e.g., Google Translate from 2006) | MT quality improving but far from human level; NER robust in limited domains |

NLP timeline

| Era / Year | Key NLP Developments | Performance on Tasks |
|---|---|---|
| 2010–mid-2010s | Introduction of word embeddings (Word2Vec 2013, GloVe 2014); RNNs & seq2seq models; early neural MT | Embeddings enable semantic similarity; neural MT achieves noticeable improvement |
| Late 2010s | Transformer architecture (2017); BERT (2018); adoption in search engines by 2020 | NER & QA reach human or super-human on benchmarks; MT approaches near-human fluency |
| 2020s (LLM era) | Emergence of GPT-3, ChatGPT, GPT-4, etc. (LLMs dominate NLP paradigm) | Across-the-board excellence: near-human or exceeding performance in translation, NER, summarization, reasoning |

Language is … complicated

Will you marry me? : a marriage proposal.

Will, You, Mary, Me : a card game proposal.

Will, you marry me : a time traveller spoiling the future.

Will you, Mary me : a cavewoman named Mary, trying to make Will, who has amnesia, remember who he is.

- Let’s eat, grandpa.

- Let’s eat grandpa.

Language is … complicated

- Variable length

- Wide variety of complexity across languages

- German: Donaudampfschiffahrtsgesellschaftskapitän (6 “words”)

- Chinese: 50,000 different characters (2-3k to read a newspaper)

- Slavic: Different word forms depending on gender, case, tense

- Encoding: Unicode vs ASCII

- Unstructured data

- Code switching: mixing several languages in the same text

- Idioms, generational lingo, slang
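A quick illustration of the Unicode vs ASCII point, in Python: one German letter is a single character but takes two bytes in UTF-8, and cannot be encoded in ASCII at all. The word is written with an escape (\u00e4 is “ä”) so the example does not depend on how the file itself is encoded.

```python
word = "Kapit\u00e4n"  # "Kapitän"

n_chars = len(word)                  # 7 characters
n_bytes = len(word.encode("utf-8"))  # 8 bytes: "ä" takes 2 bytes in UTF-8

# ASCII only covers 128 characters (no accents), so encoding fails
try:
    word.encode("ascii")
    is_ascii = True
except UnicodeEncodeError:
    is_ascii = False

print(n_chars, n_bytes, is_ascii)
```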

classic NLP problems

- Text mining

- NER: Named Entity Recognition: LOC, PER, ORG, …

- POS: Part of speech Tagging : nouns, adjectives, verbs, …

- Classification: sentiment analysis, spam, hate speech, …

- Topic identification, topic modeling

- WSD: word sense disambiguation : bank, fly

- STT / TTS: speech to text, text to speech

and more difficult tasks such as

- Automated Translation, summarization, question answering

Classic NLP

- deterministic: the same input always gives the same output (unlike LLMs, which generate text probabilistically)

- based on the decomposition of text into identifiable elements: words, grammar roles, entities, etc

- applied to sentences, noun phrases, words

- includes preprocessing methods applied to the raw text to facilitate processing

- stop words: remove very frequent words such as and, the, of, etc.

- stemming: universities, universal, universe -> univers (Porter stemmer; meaning is often lost)

- lemmatization: run, running, ran -> run (more accurate than stemming: the result is a real dictionary word)

- subword tokenization: “unhappiness” → [“un”, “happi”, “ness”]

Requires language-specific models and rules: Russian or French need different lemmatizers than English.
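The stop-word and lemmatization steps can be sketched in plain Python. The stop-word list and the lemma lookup table below are hypothetical, tiny hand-made resources; spaCy and NLTK ship complete, language-specific versions, which is exactly why a French corpus needs a French model.

```python
# Hypothetical toy resources, for illustration only
STOP_WORDS = {"and", "the", "of", "a", "to", "is", "was"}
LEMMAS = {"running": "run", "ran": "run", "runs": "run", "cats": "cat"}

def preprocess(text):
    """Lowercase, split on whitespace, drop stop words, then lemmatize."""
    tokens = text.lower().split()
    # 1. stop-word removal
    tokens = [t for t in tokens if t not in STOP_WORDS]
    # 2. lookup lemmatization: unknown words are kept unchanged
    return [LEMMAS.get(t, t) for t in tokens]

print(preprocess("The cats ran and the dog was running"))
```

With a real lemmatizer the lookup table is replaced by a language model plus rules, but the input and output look the same: a shorter list of normalized words.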

What’s the unit of text ?

We could work with

- words, syllables, tokens, letters & punctuation

- Bigrams, n-grams: New York, cul-de-sac, pain au chocolat

- noun phrases: group of words that function as a noun: the big brown dog with spots

- sentences, paragraphs, tweets, articles, books, comments

- Corpus: a whole collection of texts
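Extracting n-grams is a sliding window over the tokens. A minimal sketch, assuming simple whitespace tokenization (real tokenizers also split off punctuation); ngrams is a hypothetical helper name:

```python
def ngrams(tokens, n):
    """Return all contiguous sequences of n tokens, as tuples."""
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]

tokens = "I moved to New York last year".split()
bigrams = ngrams(tokens, 2)
print(bigrams)  # ('New', 'York') is one of the bigrams
```

Counting how often each bigram occurs in a corpus is a simple way to spot multi-word units such as “New York”.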

Need to deal with

- The vocabulary is large / infinite and fast changing

- Typos, multiple spellings

- word forms: plural, declension (who, whom, whose), conjugation, etc.

Subword tokenization

What’s the most efficient unit of text ?

- Using words is problematic: very large vocabulary, multiple forms: plural, declension (who, whom, whose), conjugation, etc.

- Using letters: the units are too short to carry meaning on their own

- Let’s split words into multiple tokens: subword tokenization

- unhappiness -> un + happ + in + ess / un + hap + pi + ness

- running -> runn + ing

- universities -> uni + vers + iti + es
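A minimal sketch of how a word can be split into subword tokens: greedy longest-match-first, similar in spirit to how WordPiece tokenizes at inference time. The vocabulary is a hypothetical hand-picked one chosen to reproduce the splits above; real tokenizers (BPE, WordPiece) learn tens of thousands of subwords from data. When nothing matches, this sketch falls back to single characters (byte-level tokenizers fall back to bytes), so no word is ever out of vocabulary.

```python
# Hypothetical toy vocabulary
VOCAB = {"un", "happi", "ness", "runn", "ing", "uni", "vers", "iti", "es"}

def subword_tokenize(word, vocab=VOCAB):
    """Split a word into the longest vocabulary pieces, left to right."""
    tokens = []
    start = 0
    while start < len(word):
        # try the longest candidate first, then shrink it
        for end in range(len(word), start, -1):
            piece = word[start:end]
            # a single character is always accepted as a fallback
            if piece in vocab or end - start == 1:
                tokens.append(piece)
                start = end
                break
    return tokens

for w in ["unhappiness", "running", "universities", "covid"]:
    print(w, "->", subword_tokenize(w))
```

The unseen word “covid” is still tokenized, just into smaller pieces (here single letters).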

Benefits of subword tokenization

- no word is OOV (out of vocabulary): a word that appeared after the training data was collected, such as “COVID”, can still be split into known tokens

- captures semantic and morphological meaning better

- Vocabulary handling: an unlimited vocabulary from a finite set of tokens

That’s also why LLMs are very robust to typos and misspellings.

Bag of words for binary classification

You want to build a model that can predict whether an email is spam or not, or whether a review is positive or negative (sentiment analysis).

To train the model you need to transform the corpus into numbers.

The main classic NLP method for that is the bag of words weighted by tf-idf (term frequency-inverse document frequency).

For each document (here, a sentence), we count how often each word appears (term frequency) and multiply that count by the word's inverse document frequency, which is low for words found in many documents of the corpus (the, is) and high for rare, distinctive words.

This gives us a matrix, one row per document and one column per word, that we can use to train a model.
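In formula form, a common unsmoothed variant of tf-idf for a term t in a document d, where N is the number of documents in the corpus and df(t) the number of documents that contain t (libraries such as scikit-learn add smoothing and row normalization by default):

```latex
\mathrm{tfidf}(t, d) = \mathrm{tf}(t, d) \times \log \frac{N}{\mathrm{df}(t)}
```

For example, with N = 3 documents, a word found in all three gets log(3/3) = 0 and drops out, while a word found in only one gets log 3 ≈ 1.1 (natural log).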

but this approach has multiple problems

- OOV: out-of-vocabulary words are not taken into account

- large vocabulary => huge matrix

- sparse: the matrix is full of zeros

- works for easy tasks (spam, sentiment) but fails for more complex tasks

- etc.

tf (term frequency) example

Let’s take an example. Consider the following 3 sentences from the well-known song “Surfin’ Bird” and count the number of times each word appears in each sentence.

| sentence | about | bird | heard | is | the | word | you |
|---|---|---|---|---|---|---|---|
| About the bird, the bird, bird bird bird | 1 | 5 | 0 | 0 | 2 | 0 | 0 |
| You heard about the bird | 1 | 1 | 1 | 0 | 1 | 0 | 1 |
| The bird is the word | 0 | 1 | 0 | 1 | 2 | 1 | 0 |
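Extending the table to tf-idf: a minimal sketch in plain Python that recomputes the counts above and weights them with the unsmoothed idf = ln(N / df). Lowercasing and keeping only runs of letters is an assumed tokenization choice that drops the commas. Note that “bird” and “the”, which appear in every sentence, end up with a weight of 0.

```python
import math
import re
from collections import Counter

sentences = [
    "About the bird, the bird, bird bird bird",
    "You heard about the bird",
    "The bird is the word",
]

def tokenize(text):
    # lowercase and keep only runs of letters (punctuation is dropped)
    return re.findall(r"[a-z]+", text.lower())

docs = [Counter(tokenize(s)) for s in sentences]
vocab = sorted(set().union(*docs))

# term frequency: raw counts, one row per sentence (same as the table)
tf = [[doc[w] for w in vocab] for doc in docs]

# document frequency and unsmoothed inverse document frequency
N = len(docs)
df = {w: sum(1 for doc in docs if w in doc) for w in vocab}
idf = {w: math.log(N / df[w]) for w in vocab}

tfidf = [[doc[w] * idf[w] for w in vocab] for doc in docs]

print(vocab)
for s, counts, weights in zip(sentences, tf, tfidf):
    print(s, counts, [round(x, 2) for x in weights])
```

In practice, scikit-learn's TfidfVectorizer builds the same kind of matrix (with smoothed idf) in a single call.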

NER: named entities recognition

Identify and label entities of predefined types in a text: locations, groups, persons, companies, money, etc.

NER uses

- Pattern matching: Looks for capital letters, titles (Mr., Dr.), known entity lists

- Context clues: Words like “works at” suggest an organization follows

- Statistical models: Trained on labeled data to recognize entity patterns

NER models and rules are language specific

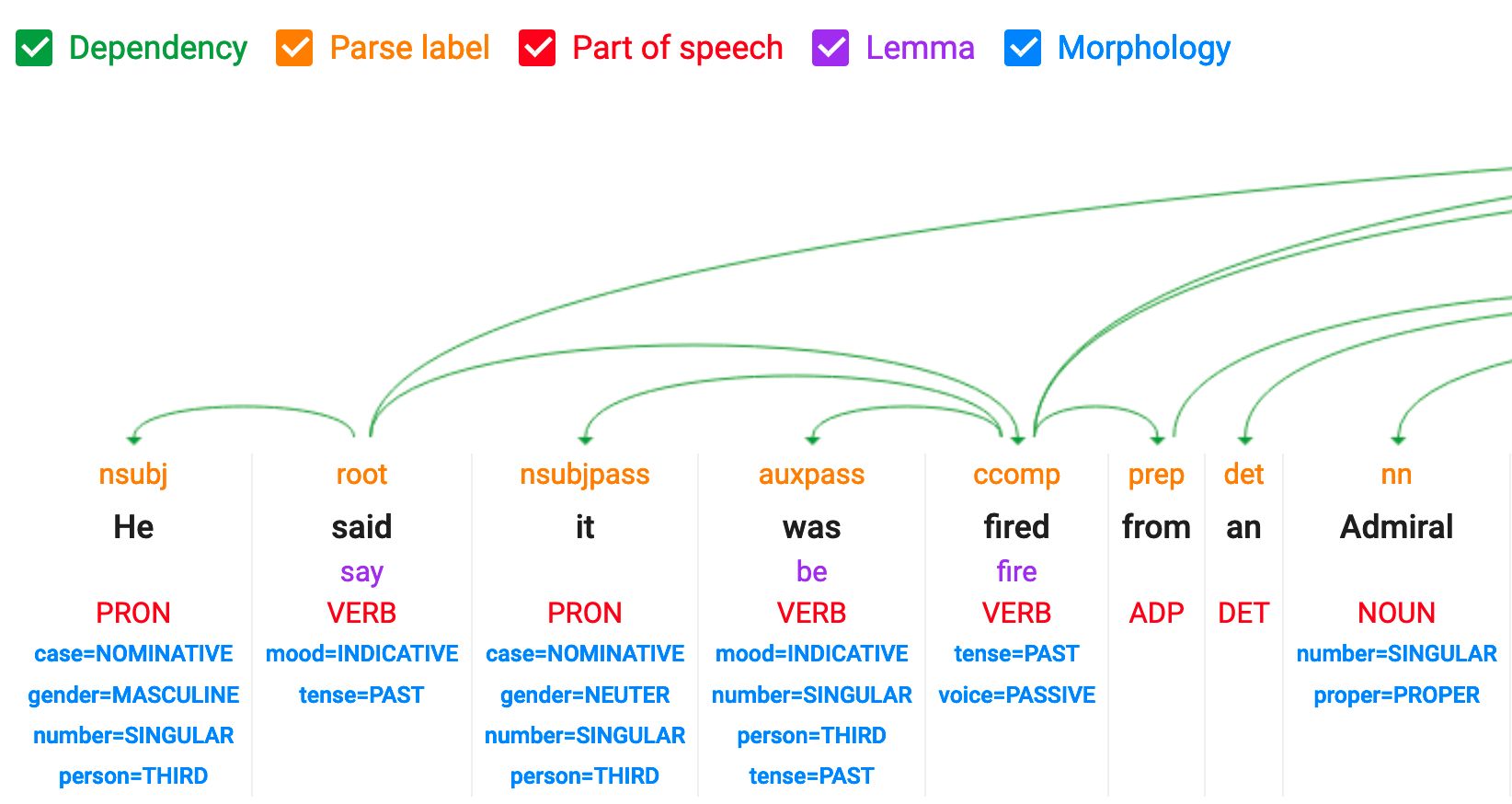

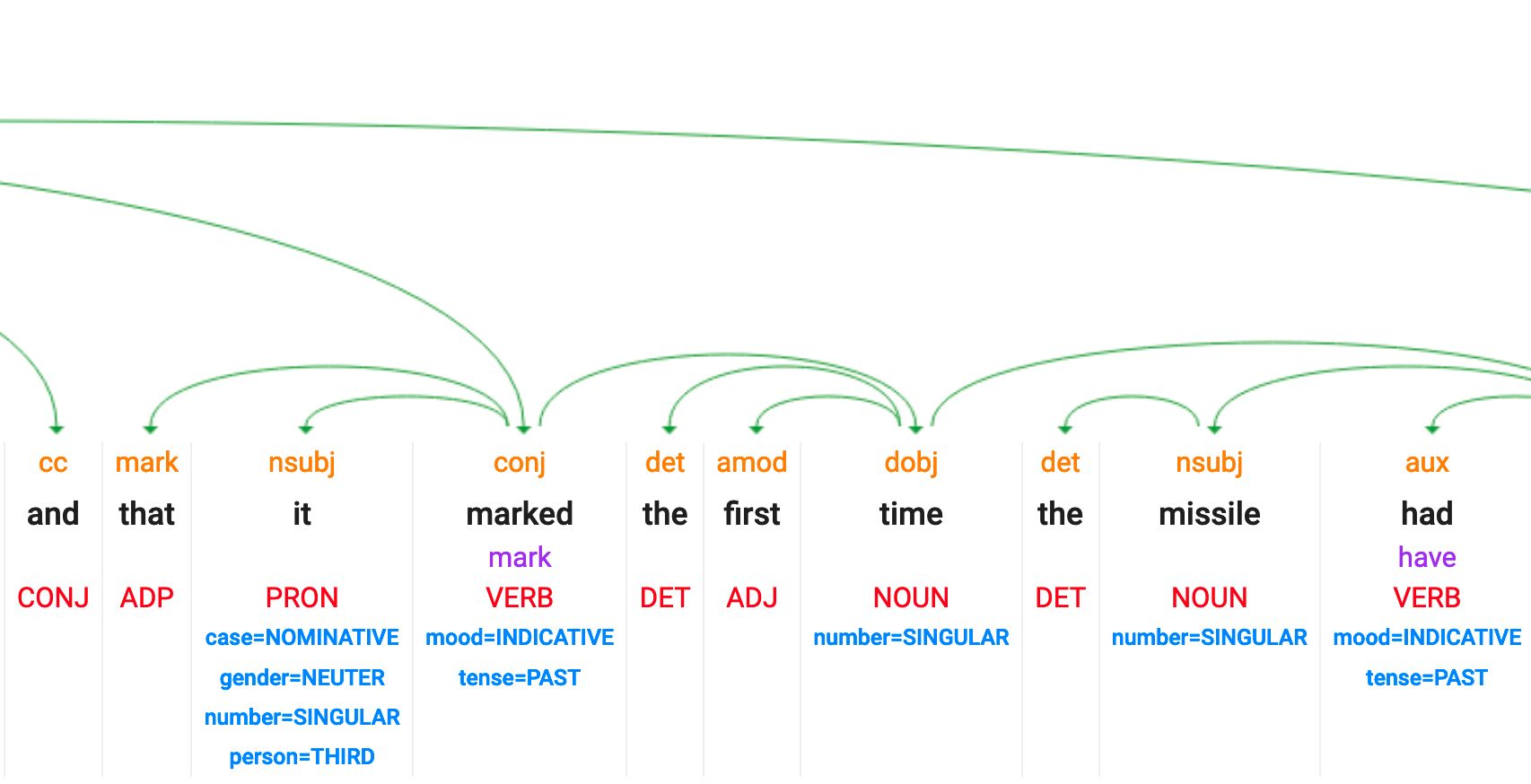
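The three rule types listed above can be combined in a toy rule-based NER. This is a minimal sketch, not how spaCy works internally (spaCy uses trained statistical models); the gazetteer entries, the regular expressions and the name rule_based_ner are all hypothetical and English-only.

```python
import re

# Hypothetical gazetteer (known entity list)
GAZETTEER = {"Google": "ORG", "Recode": "ORG", "Paris": "LOC"}

# Pattern matching: a title followed by capitalized words -> person
TITLE = re.compile(r"\b(?:Mr|Mrs|Ms|Dr)\.\s+([A-Z][a-z]+(?:\s+[A-Z][a-z]+)*)")
# Context clue: "works at X" -> X is an organization
WORKS_AT = re.compile(r"\bworks at\s+([A-Z][A-Za-z]+)")

def rule_based_ner(text):
    entities = []
    for m in TITLE.finditer(text):
        entities.append((m.group(1), "PER"))
    for m in WORKS_AT.finditer(text):
        entities.append((m.group(1), "ORG"))
    # known entity list lookup, on whole words only
    for name, label in GAZETTEER.items():
        if re.search(rf"\b{re.escape(name)}\b", text):
            entities.append((name, label))
    # remove duplicates while keeping the order of discovery
    return list(dict.fromkeys(entities))

print(rule_based_ner("Dr. Jane Smith works at Acme and lives near Paris."))
```

Rules like these are easy to write but break quickly (lowercase names, unknown titles, other languages), which is why modern NER relies on models trained on labeled data.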

POS: part of speech tagging

Identify the grammatical category of each word: ADJ, NOUN, VERB, etc.

POS uses:

- Word endings: “-ing” often = verb, “-ly” often = adverb

- Position rules: Determiners (“the”) come before nouns

- Context: the same word can be a noun or a verb (“I run” vs “a run”)

POS models and rules are also language specific

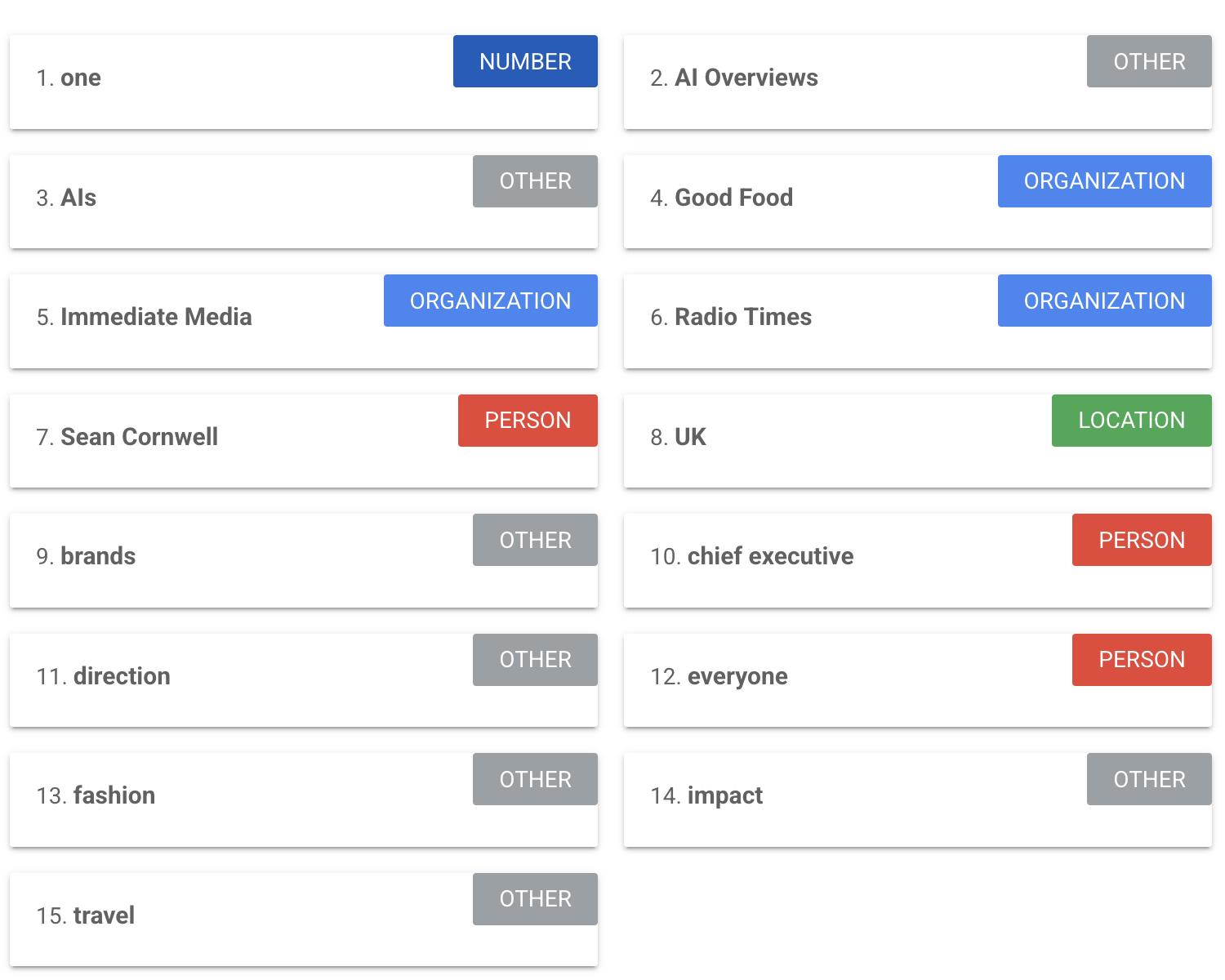

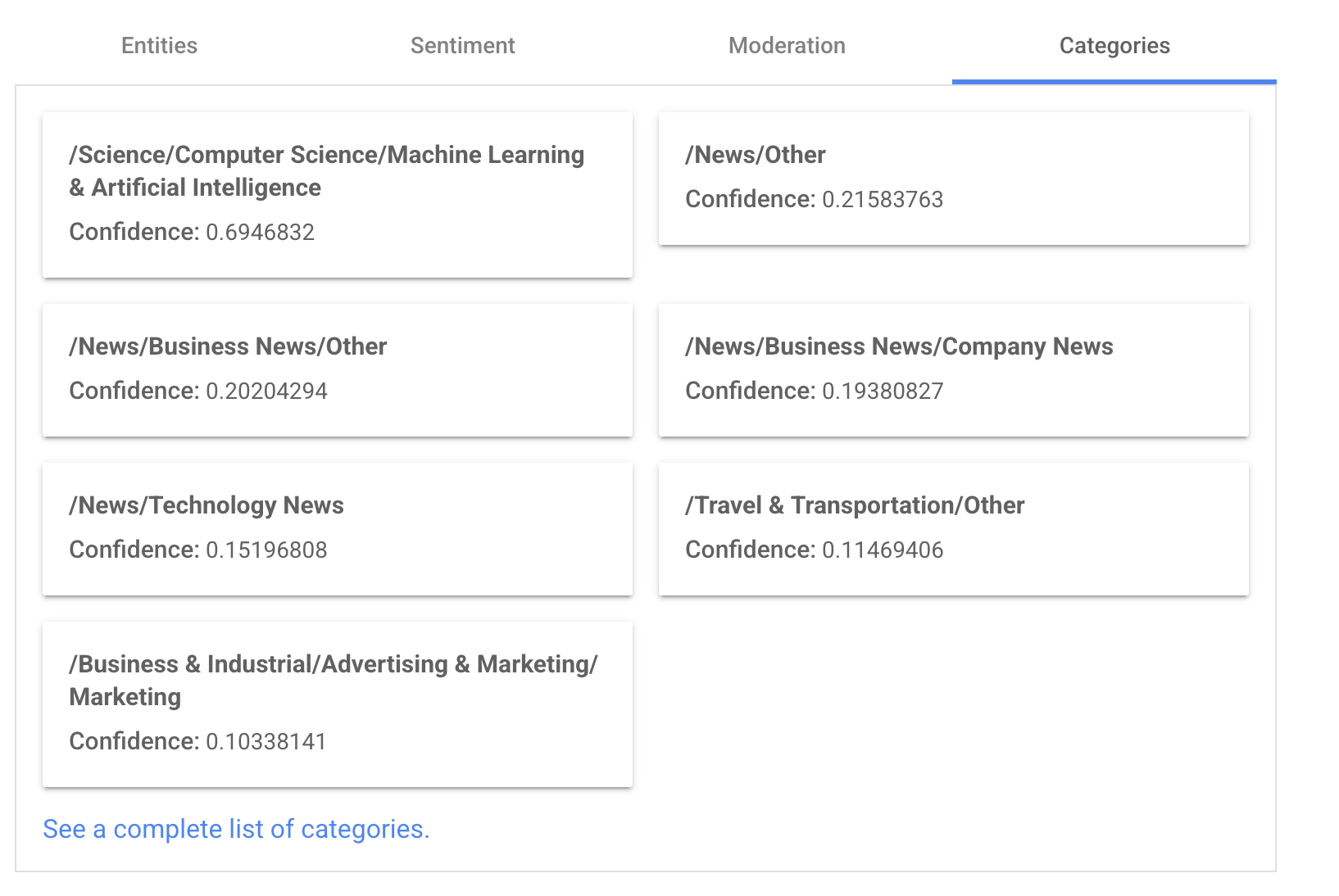
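The POS rules above can be turned into a toy tagger. A minimal sketch, assuming English and a hypothetical three-word determiner list; rule_based_pos is an illustrative name and X is used for “no rule fired”.

```python
DETERMINERS = {"the", "a", "an"}

def rule_based_pos(tokens):
    """Tag each token with a few hand-written rules."""
    tags = []
    for word in tokens:
        w = word.lower()
        if w in DETERMINERS:
            tag = "DET"
        elif tags and tags[-1] == "DET":
            tag = "NOUN"   # position rule: a noun follows a determiner
        elif w.endswith("ly"):
            tag = "ADV"    # word ending: -ly often marks an adverb
        elif w.endswith("ing"):
            tag = "VERB"   # word ending: -ing often marks a verb
        else:
            tag = "X"      # unknown: no rule applies
        tags.append(tag)
    return list(zip(tokens, tags))

print(rule_based_pos("They are running quickly".split()))
print(rule_based_pos("She went for a run".split()))
```

Here “run” is tagged NOUN only because of the preceding “a”. The same rule would wrongly tag “big” in “the big dog” as a noun, which is why real taggers are trained on annotated corpora.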

Demo classic NLP : NER and POS

https://cloud.google.com/natural-language

Input some text (here from an FT article on how AI summaries in Google search, the AI Overviews, affect traffic to publishers):

“Like everyone, we have definitely felt the impact of AI Overviews. There is only one direction of travel; not only are AIs getting better, but they’re getting better in an exponential fashion,” said Sean Cornwell, chief executive of Immediate Media, which owns the Radio Times and Good Food brands in the UK.

NER

Classification

- sentiment scoring

- categories

- Moderation

POS: part of speech tagging and dependency parsing

Unfortunately, this feature is no longer available in the Google Natural Language demo.

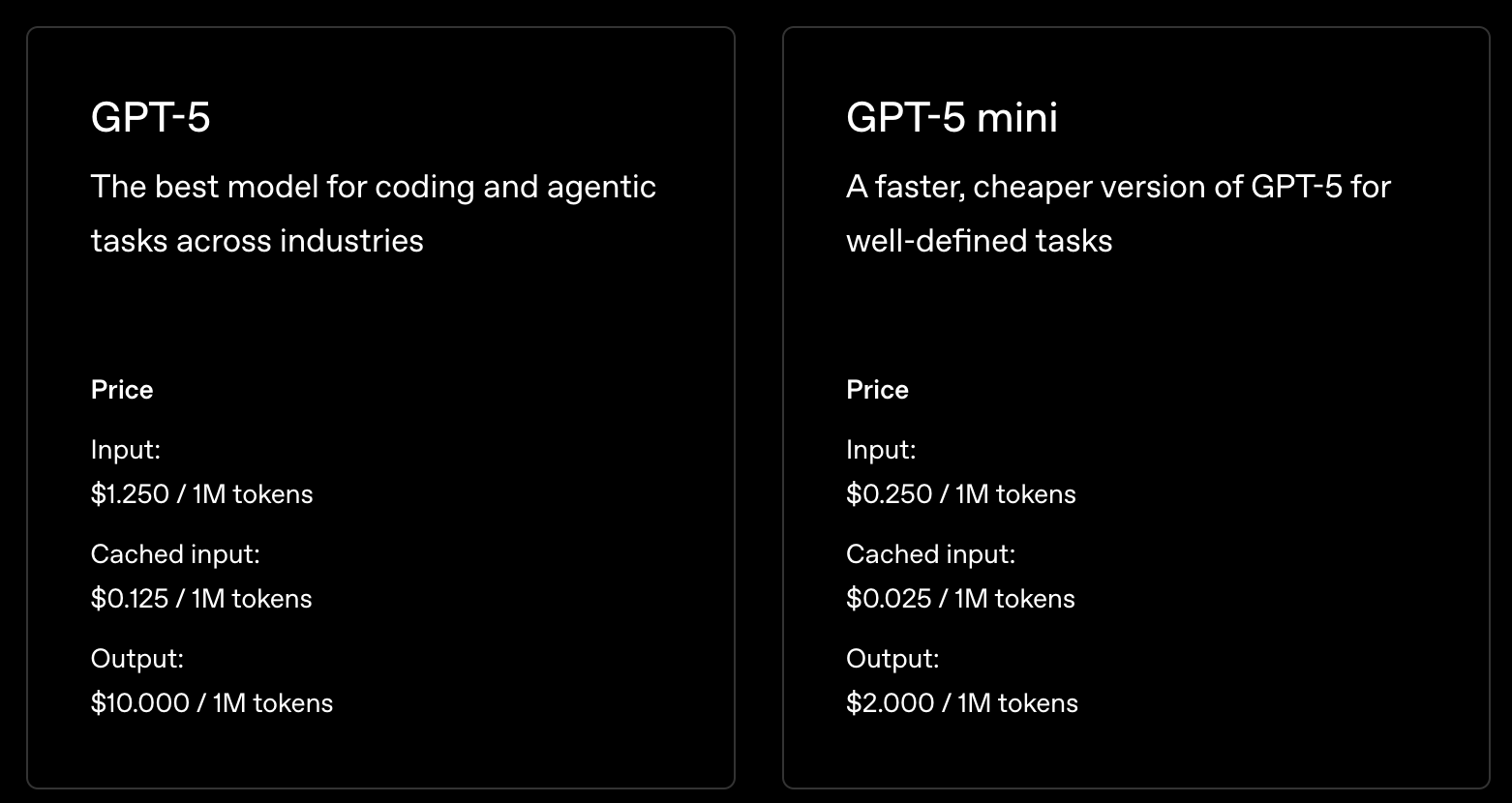

Tokens and LLMs

- Context Window = measured in tokens, not words: 1M-token window, 200k-token window, …

- Pricing Model = cost per token (input + output)

- Non-English Text = more tokens needed

- Token Limits = why responses cut off

- Character-Level Tasks = difficult (LLMs see tokens, not letters)

- Efficiency Varies = by language, domain, complexity
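To make the pricing bullet concrete, a small sketch of the arithmetic: input and output tokens are billed separately, each at a per-million-token rate. The prices below are hypothetical placeholders, not any provider's actual rates, and estimate_cost is an illustrative name.

```python
def estimate_cost(input_tokens, output_tokens, price_in_per_m, price_out_per_m):
    """Cost in dollars, given prices per 1M input and per 1M output tokens."""
    return (input_tokens * price_in_per_m + output_tokens * price_out_per_m) / 1_000_000

# Hypothetical prices: $2 per 1M input tokens, $8 per 1M output tokens
cost = estimate_cost(input_tokens=150_000, output_tokens=20_000,
                     price_in_per_m=2.0, price_out_per_m=8.0)
print(f"${cost:.2f}")
```

Since non-English text usually needs more tokens for the same content, the same request can cost more in another language.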

NER and POS with spacy.io

There are a few important NLP Python libraries: Spacy.io and NLTK

Spacy.io supports 75+ languages and offers

- entity recognition, part-of-speech tagging, dependency parsing, sentence segmentation, text classification, lemmatization, morphological analysis, entity linking and more

=> follow Ines Montani (co-founder of Explosion, the company behind spaCy), she’s super cool

Spacy code

To use Spacy.io we need to

- install and import the library

- then download a model for the language of the corpus

- Each language offers multiple models of varying sizes

- Each model is trained to handle POS tagging, NER and lemmatization

- Once the model is loaded, we apply it to the text we want to analyze to get a spaCy doc object

- then we can easily extract named entities, such as locations or persons, with a few simple lines

- Similarly, we can identify all the ADJ and NOUN tokens in a text

# pip install -U spacy
# python -m spacy download en_core_web_sm
import spacy

# Load English tokenizer, tagger, parser and NER
nlp = spacy.load("en_core_web_sm")

# Process whole documents
text = ("When Sebastian Thrun started working on self-driving cars at "
        "Google in 2007, few people outside of the company took him "
        "seriously. “I can tell you very senior CEOs of major American "
        "car companies would shake my hand and turn away because I wasn’t "
        "worth talking to,” said Thrun, in an interview with Recode earlier "
        "this week.")

# Run the pipeline on the text: nlp(text) returns a Doc object
doc = nlp(text)

# Analyze syntax
print("Noun phrases:", [chunk.text for chunk in doc.noun_chunks])
print("Verbs:", [token.lemma_ for token in doc if token.pos_ == "VERB"])

# Find named entities, phrases and concepts
for entity in doc.ents:
    print(entity.text, entity.label_)

Intermission

Forbidden Planet (1956)

Robby the robot

Practice

Build a dataset using the NYT API

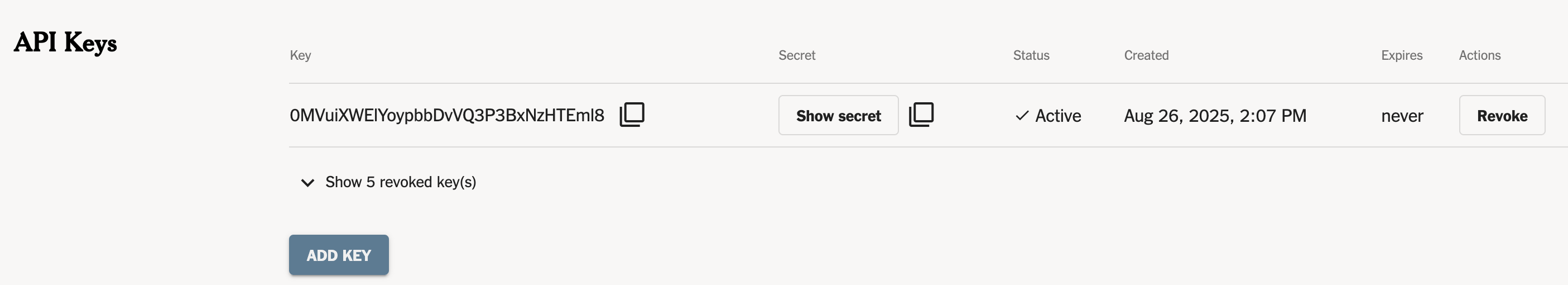

NYT API

Offers free access: developer.nytimes.com/apis

Follow the instructions on developer.nytimes.com/get-started

- open an account on the NYT developer website

- create an app

- get an API key

An API key is SECRET

Public API keys cost lives and money, …,

ok, …., mostly money

Do not publish your API key

see ref

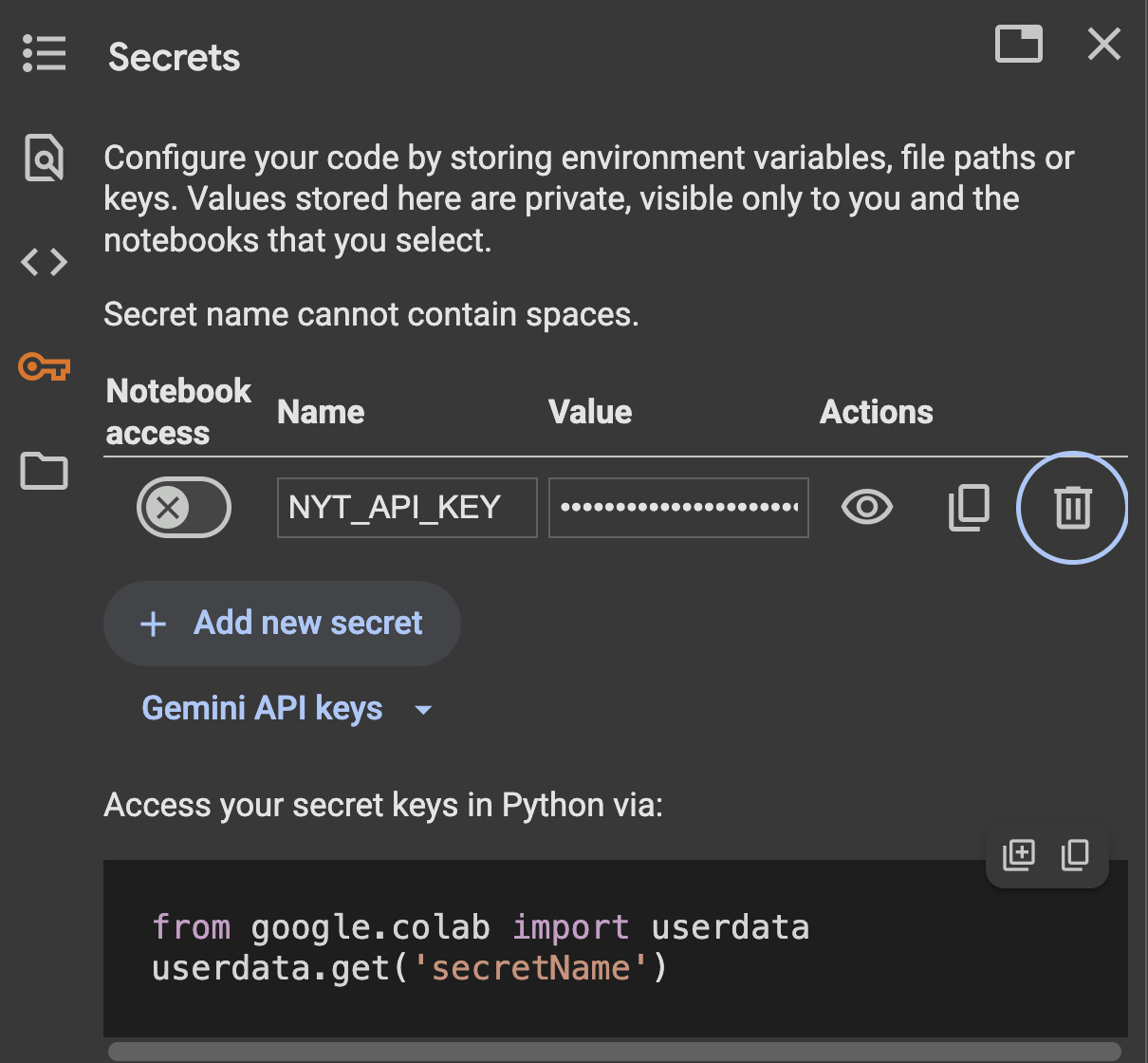

Secret keys in google colab

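- open the Secrets panel (key icon) in the left menu

- add a key named NYT_API_KEY with your API key as its value

Load the key in Colab

from google.colab import userdata

api_key = userdata.get('NYT_API_KEY')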
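A minimal sketch of one request to the NYT Article Search API using only Python's standard library. The endpoint URL and the response fields (response → docs, headline.main, abstract, web_url, pub_date) are assumptions based on the Article Search documentation; check developer.nytimes.com before relying on them. build_url, parse_articles and fetch_articles are hypothetical helper names.

```python
import json
import urllib.parse
import urllib.request

# Assumed Article Search endpoint (see developer.nytimes.com/apis)
BASE_URL = "https://api.nytimes.com/svc/search/v2/articlesearch.json"

def build_url(query, api_key, page=0):
    """Build the request URL: search terms, page number and API key."""
    params = {"q": query, "page": page, "api-key": api_key}
    return BASE_URL + "?" + urllib.parse.urlencode(params)

def parse_articles(payload):
    """Keep a few fields per article from the decoded JSON response."""
    docs = (payload.get("response") or {}).get("docs") or []
    return [
        {
            "headline": (d.get("headline") or {}).get("main", ""),
            "abstract": d.get("abstract", ""),
            "url": d.get("web_url", ""),
            "date": d.get("pub_date", ""),
        }
        for d in docs
    ]

def fetch_articles(query, api_key, page=0):
    """Send one request and return the parsed articles (needs network access)."""
    with urllib.request.urlopen(build_url(query, api_key, page)) as resp:
        return parse_articles(json.load(resp))
```

In the Colab notebook, after loading the key: articles = fetch_articles("artificial intelligence", api_key). Loop over page=0, 1, 2, … to collect more articles, pausing between requests since the API is rate limited.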

NYT API - Practice

https://colab.research.google.com/drive/1PoFhONvZZxcpIG-_XMoN9wKTT1U7KS7X#scrollTo=aJVkXUfIFjqX

Goal :

- choose a topic, a set of articles

- build a dataset of articles

- extract entities, nouns, verbs, adjectives using spacy.io

- save the dataset on your laptop
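For the last goal, a minimal sketch that writes the dataset to a CSV file, assuming each article has already been turned into a flat dict (for example its headline plus the nouns extracted with spaCy joined into one string). save_dataset and the file name nyt_articles.csv are hypothetical.

```python
import csv

def save_dataset(rows, path="nyt_articles.csv"):
    """Write a list of dicts (one per article) to a CSV file with a header row."""
    if not rows:
        return
    with open(path, "w", newline="", encoding="utf-8") as f:
        writer = csv.DictWriter(f, fieldnames=list(rows[0].keys()))
        writer.writeheader()
        writer.writerows(rows)

# In Colab, the file can then be copied to your laptop with:
#   from google.colab import files
#   files.download("nyt_articles.csv")
```

CSV keeps the dataset readable in any spreadsheet; lists such as nouns or entities need to be joined into a single string per cell first.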

Next time

- Modern NLP

- Embeddings

- RAG - context window