Project - Create, Validate, Import, Explore

In this project you will create a new MongoDB database from a dataset of your choice.

Your task consists of:

- Choosing an interesting dataset

- Imagining an application that uses that particular dataset

- Importing the dataset into a MongoDB server

- Writing about the whole process

You can work alone or in groups of at most two.

The deliverable is a report on these tasks.

Avoid using Atlas; use a local MongoDB installation instead.

Deliverable

Please fill in the spreadsheet with a link to the dataset and a link to the Google Doc that you will submit.

- You must write your report in a Google Doc

- Invite [email protected] as editor

This project is graded.

You will have 5 minutes to present your work on Friday Nov 28th. No slides needed: just share your screen and talk.

The project report: A Tutorial

You will write a tutorial based on your experience.

The document must include the following elements:

- justify the dataset choice and its interest

- describe the application that will use it, thinking especially about how the application will exploit the data

- why choose a document store like MongoDB rather than a SQL database

- how the data schema choice aligns with data exploitation by the application

- hardware context

- tools used

Throughout the project,

- bugs, problems, and solutions found

- performance

and finish with

- an exploration of the dataset with queries used in the application

The most interesting part of the report is the issues you encountered and how you solved them.

Worth repeating:

- The document must be a Google document.

- Invite me as editor [email protected]

genAI usage

If (hahaha) you use LLMs to write, it's ok, but please

- keep it short

- read and rewrite the text before submitting

- Copy the prompts at the end of the report

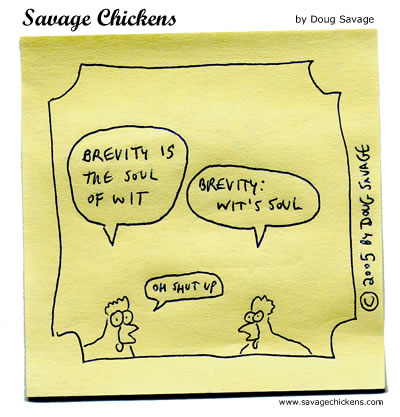

As the Bard said: "Brevity is the soul of wit."

Dataset Selection

The dataset choice is yours. The only condition is that it must be fairly large: several GB of data.

- The dataset can include long texts (articles, books, ...), IoT data, etc.

- The dataset must be large enough to justify using MongoDB. Choose a dataset of at least 1 GB.

- Avoid datasets that are mainly images, videos, etc., as these would require a cloud storage solution so that the media files are not stored directly in MongoDB documents.

Application Design

Start by choosing the dataset and imagining the use of this data by an application.

For example, with the dataset of the 210k trees of Paris, we could imagine:

- field user application for recording tree dimensions

- exploration application for Paris trees for botanists or tourists

- tree management application dedicated to tree type selection and geolocation

When you have an idea for your application, think about how it will consume the data.

What will be the most frequent operations and queries in the application?

For example, for the first "field user" application:

- find a tree based on its geolocation and species

- record a new measurement of a given tree's dimensions

- visualize tree evolution since planting and compare with other trees of the same species

etc...
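As an illustration, the first two operations of the "field user" application could translate into the query filter and update below. This is a minimal sketch in JavaScript (usable in mongosh), assuming a hypothetical document shape: a species string, a location stored as a GeoJSON Point, and a measurements array. The $near operator requires a 2dsphere index on location, and GeoJSON coordinates are ordered [longitude, latitude].

```javascript
// Sketch only: field names below are hypothetical.
// Assumed document shape:
// { species: "Platanus", location: { type: "Point", coordinates: [lng, lat] },
//   measurements: [{ date, height_m, circumference_cm }] }

// Filter: trees of one species within maxMeters of a point, nearest first.
// $near needs a 2dsphere index on "location"; GeoJSON order is [longitude, latitude].
function nearbyTreesFilter(lng, lat, species, maxMeters) {
  return {
    species: species,
    location: {
      $near: {
        $geometry: { type: "Point", coordinates: [lng, lat] },
        $maxDistance: maxMeters, // in meters for GeoJSON points
      },
    },
  };
}

// Update: append a new measurement to a tree's measurements array.
function addMeasurementUpdate(heightM, circumferenceCm, date = new Date()) {
  return {
    $push: {
      measurements: { date: date, height_m: heightM, circumference_cm: circumferenceCm },
    },
  };
}

// Only runs inside mongosh, where the global `db` exists.
if (typeof db !== "undefined") {
  const filter = nearbyTreesFilter(2.3522, 48.8566, "Platanus", 200);
  printjson(db.trees.find(filter).limit(5).toArray());
  // e.g. db.trees.updateOne({ _id: someTreeId }, addMeasurementUpdate(12.5, 140));
}
```

Writing down these query shapes early tells you which fields must be indexed and how the documents should be structured.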

Preparation Phase

Prepare your working environment.

Note the technical characteristics of your computer that will influence your import strategy.

Note for example:

- The amount of available RAM

- Free disk space

- The type and speed of your processor

- Your operating system (Windows 11 or macOS)

Install MongoDB and mongoimport

Then install MongoDB Community Edition.

On Windows 11, you will use the MSI installer available on the official website.

On macOS, you can use Homebrew: run brew tap mongodb/brew, then brew install mongodb-community.

Verify that the installation is successful by starting the MongoDB server and connecting to it.

To check whether mongoimport is already installed on your machine, run mongoimport --version in a terminal. If it prints a version number, you're fine; otherwise, you must install it.

See this page to download the command-line utilities (the MongoDB Database Tools). The list of utilities is here. It includes mongoimport and mongoexport; see also mongostat and mongotop as diagnostic tools.

Installing the MongoDB server and tools on Windows is usually more difficult than on macOS or Linux. ChatGPT is often a great help for troubleshooting.

Schema Analysis and Design

Before starting the import, examine the original dataset files.

In your documentation, describe:

- The structure of the data

- Data types for each column

- Potential relationships between different data

- Potential data quality problems (e.g., missing values)

From this analysis, design your schema validation rules.

Think about the level of validation you want to implement and justify your choice in your documentation.
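For instance, a validator for the trees example could look like the sketch below, run from mongosh. The field names, types, and the choice of validationLevel "moderate" with validationAction "warn" are assumptions for illustration: warnings let a first import go through while logging the documents that break the rules, and you can switch to "error" once the data is clean.

```javascript
// Sketch of a $jsonSchema validator for a hypothetical trees collection.
// Field names and types are illustrative; derive yours from your schema analysis.
const treeSchema = {
  bsonType: "object",
  required: ["species", "location"],
  properties: {
    species: { bsonType: "string", description: "botanical species, required" },
    height_m: { bsonType: "number", minimum: 0, description: "height in meters, optional" },
    location: {
      bsonType: "object",
      required: ["type", "coordinates"],
      properties: {
        type: { enum: ["Point"] },
        coordinates: { bsonType: "array", minItems: 2, maxItems: 2, items: { bsonType: "number" } },
      },
    },
  },
};

// "moderate": validate inserts, and updates of documents that already pass validation.
// "warn": log violations instead of rejecting the documents.
const collectionOptions = {
  validator: { $jsonSchema: treeSchema },
  validationLevel: "moderate",
  validationAction: "warn",
};

// Only runs inside mongosh, where the global `db` exists.
if (typeof db !== "undefined") {
  db.createCollection("trees", collectionOptions);
}
```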

Import Planning

In your report, note:

- The total size of data to import

- The chosen import strategy (bulk or incremental import)

- The reasons for your choice

- Potential risks and workaround solutions considered

Indexing Strategy

Identify fields that will be frequently queried in your requests.

In your report, specify:

- The indexes you plan to create

- The chosen time for their creation (before, during or after import)

- The justification for your indexing strategy
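For the trees example, where users search by geolocation and species, an index plan could look like the mongosh sketch below. The field names (location, species, height_m) and index names are hypothetical. Creating the indexes after the import is one common choice, since building an index once over loaded data is often faster than maintaining it on every insert. Note that a 2dsphere index build fails if some documents hold malformed GeoJSON, which also makes it a useful data quality check.

```javascript
// Hypothetical index plan; adapt the keys to your own frequent queries.
const indexPlan = [
  // Geospatial queries ($near, $geoWithin) on GeoJSON points.
  { keys: { location: "2dsphere" }, options: { name: "location_2dsphere" } },
  // Equality filter on species, then sort or range on height.
  { keys: { species: 1, height_m: -1 }, options: { name: "species_height" } },
];

// Only runs inside mongosh, where the global `db` exists.
if (typeof db !== "undefined") {
  for (const { keys, options } of indexPlan) {
    // createIndex returns the name of the index.
    print(`created ${db.trees.createIndex(keys, options)}`);
  }
  printjson(db.trees.getIndexes());
}
```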

Import Process

Start by testing your approach with a small sample of data.

Monitor system resources during import and note:

- Memory consumption

- Processor usage

- Import time

- Any error messages or warnings

If you encounter memory constraints, adjust the import batch size or use the --numInsertionWorkers option of mongoimport.
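When loading with insertMany() from mongosh, the batch size is simply the number of documents you pass per call. The sketch below groups parsed NDJSON documents into batches; BATCH_SIZE and the file name trees_sample.ndjson are hypothetical values to adapt. Since readFileSync still reads the entire file, this approach suits samples or pre-split chunks rather than the multi-GB original, where mongoimport remains the better tool.

```javascript
const fs = require("fs");

// Group any iterable into arrays of at most `size` items.
function* batches(iterable, size) {
  let batch = [];
  for (const item of iterable) {
    batch.push(item);
    if (batch.length === size) {
      yield batch;
      batch = [];
    }
  }
  if (batch.length > 0) yield batch;
}

// Parse NDJSON text one line at a time, skipping blank lines.
function* parseNdjson(text) {
  for (const line of text.split("\n")) {
    if (line.trim() !== "") yield JSON.parse(line);
  }
}

const BATCH_SIZE = 5000; // hypothetical: lower it if memory is tight

// Only runs inside mongosh, where the global `db` exists.
if (typeof db !== "undefined") {
  const text = fs.readFileSync("./trees_sample.ndjson", "utf8");
  let inserted = 0;
  for (const batch of batches(parseNdjson(text), BATCH_SIZE)) {
    // ordered: false keeps inserting the rest of a batch if one document fails;
    // an error is still thrown at the end of that batch.
    const result = db.trees.insertMany(batch, { ordered: false });
    inserted += Object.keys(result.insertedIds).length;
  }
  print(`inserted ${inserted} documents`);
}
```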

Verification and Validation

After import, verify the integrity of your data.

Include in your report:

- Queries used to verify data

- Total number of imported records

- Schema validation test results

- Query performance with your indexes
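Some of these checks can be scripted in mongosh. The sketch below counts documents, counts those that fail the collection's validator (useful when validationAction is "warn"), and condenses explain("executionStats") output into the numbers worth reporting. The trees collection and the species filter are hypothetical; a COLLSCAN stage on a frequent query usually signals a missing index.

```javascript
// Recursively collect every "stage" name found in an explain plan.
function collectStages(node, out = []) {
  if (Array.isArray(node)) {
    node.forEach((n) => collectStages(n, out));
  } else if (node !== null && typeof node === "object") {
    if (typeof node.stage === "string") out.push(node.stage);
    for (const value of Object.values(node)) collectStages(value, out);
  }
  return out;
}

// Keep only the figures worth putting in the report.
function summarizeExplain(explain) {
  const stats = explain.executionStats;
  const stages = collectStages(explain.queryPlanner.winningPlan);
  return {
    stages: stages,
    collectionScan: stages.includes("COLLSCAN"),
    nReturned: stats.nReturned,
    totalKeysExamined: stats.totalKeysExamined,
    totalDocsExamined: stats.totalDocsExamined,
    executionTimeMillis: stats.executionTimeMillis,
  };
}

// Only runs inside mongosh, where the global `db` exists.
if (typeof db !== "undefined") {
  print(`documents: ${db.trees.countDocuments()}`);
  const info = db.getCollectionInfos({ name: "trees" })[0];
  if (info && info.options.validator) {
    // Documents that do NOT match the validator's query expression.
    print(`failing validation: ${db.trees.countDocuments({ $nor: [info.options.validator] })}`);
  }
  printjson(summarizeExplain(db.trees.find({ species: "Platanus" }).explain("executionStats")));
}
```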

Summary and Reflection

Your report can include

- A chronological summary of completed steps

- Difficulties encountered and solutions found

- Observations on system performance

- A critical analysis of your approach

- Improvement suggestions for future import

- Lessons learned during this process

Errors and difficulties are part of the learning process.

Importing data in MongoDB

We have several options to load the data from a JSON file: either in mongosh with insertMany(), or from the command line with mongoimport.

In mongosh with insertMany()

The following script:

- loads the data from the trees_1k.json file

- inserts the data into the trees collection with insertMany()

- times the operation

// load the JSON data
const fs = require("fs");
const dataPath = "./trees_1k.json"; // relative to the directory mongosh was started from
const treesData = JSON.parse(fs.readFileSync(dataPath, "utf8"));
// Insert data into the desired collection and time the operation
const startTime = new Date();
db.trees.insertMany(treesData);
const endTime = new Date();
print(`Operation took ${endTime - startTime} milliseconds`);

Using mongoimport command line

mongoimport is usually the fastest option for large volumes.

By default, mongoimport takes an NDJSON file (one document per line) as input.

But you can also use a JSON file (an array of documents) if you add the flag --jsonArray.
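If your dataset comes as a JSON array, you can also convert it to NDJSON once, so that mongoimport can read it without --jsonArray. A minimal Node.js sketch, assuming the file fits in memory: JSON.parse works on a single string, and very large files may exceed V8's maximum string length, in which case a streaming JSON parser is needed instead.

```javascript
const fs = require("fs");

// Convert a JSON array file ([{...}, {...}]) into NDJSON (one document per line).
// Assumption: the whole file fits in memory as a single string.
function jsonArrayToNdjson(srcPath, destPath) {
  const docs = JSON.parse(fs.readFileSync(srcPath, "utf8"));
  if (!Array.isArray(docs)) throw new Error(`${srcPath} does not contain a JSON array`);
  const fd = fs.openSync(destPath, "w");
  try {
    for (const doc of docs) fs.writeSync(fd, JSON.stringify(doc) + "\n");
  } finally {
    fs.closeSync(fd);
  }
  return docs.length; // number of documents written
}
```

The resulting file can then be passed to mongoimport with --file and no --jsonArray flag.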

The overall syntax for mongoimport is as follows (with a local server, the URI is typically mongodb://localhost:27017):

mongoimport --uri="mongodb+srv://<username>:<password>@<cluster-url>" \
--db <database_name> \
--collection <collection_name> \
--file <path to ndjson file>

Here are other interesting, self-explanatory flags that may come in handy:

- --mode=[insert|upsert|merge|delete]

- --stopOnError

- --drop (drops the collection first)

In our context, here is a version of the command line, using the MONGO_ATLAS_URI environment variable and loading the JSON file trees_1k.json in the current folder.

time mongoimport --uri="${MONGO_ATLAS_URI}" \
--db treesdb \
--collection trees \
--jsonArray \
--file ./trees_1k.json

which results in

2024-12-13T11:41:45.941+0100 connected to: mongodb+srv://[**REDACTED**]@skatai.w932a.mongodb.net/
2024-12-13T11:41:48.942+0100 [########################] treesdb.trees 558KB/558KB (100.0%)
2024-12-13T11:41:52.087+0100 [########################] treesdb.trees 558KB/558KB (100.0%)
2024-12-13T11:41:52.087+0100 1000 document(s) imported successfully. 0 document(s) failed to import.
mongoimport --uri="${MONGO_ATLAS_URI}" --db treesdb --collection trees --fil 0.15s user 0.09s system 3% cpu 6.869 total
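When the import is split across several files, it helps to log the final summary line of each run for the report. The function below is a sketch that extracts both counts from that line, based on the output format shown above; mongoimport typically writes this log to stderr, so capture that stream.

```javascript
// Extract counts from mongoimport's summary line, e.g.
// "1000 document(s) imported successfully. 0 document(s) failed to import."
// Returns null when the line is not found in the given output.
function parseImportSummary(output) {
  const match = output.match(
    /(\d+) document\(s\) imported successfully\. (\d+) document\(s\) failed to import\./
  );
  if (!match) return null;
  return { imported: Number(match[1]), failed: Number(match[2]) };
}
```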

Summary

- fill in the spreadsheet

- install MongoDB on your local machine

- choose a large dataset and import it into MongoDB

- explore the dataset

- write everything down as if it were the tutorial you would have loved to read before starting this project

- the narrative behind the dataset and the related applications

- issues you have encountered and how you've solved them

- write down the command lines you used

- log the outputs, errors, etc.

You will present your project on Friday Dec 13th. You have 5 minutes to present your work. No slides: just walk us through it.

Since there are many of us, it is very important that you stick to the 5-minute presentation time.

And don't forget to have fun.